Data collection is a vital part of building artificial intelligence (AI) and machine learning models, but it is also a sensitive process. Collection errors, misunderstandings about what to gather, and inaccurate or inconsistent data can plague organizations trying to train models on specific information.

When it comes to speech recognition, complex challenges can make data collection trickier than expected. Even today, speech-to-text systems aren’t 100% accurate; Amazon’s system, for example, has clocked an error rate of 18.42%. When collected data informs important decisions, it needs to be reliable, accurate, and well-rounded. With the right tools and best practices, organizations can make sure the data going into their AI models is reliable, leading to more accurate outcomes.

In this post, we’ll look at best practices teams can implement to gather speech data more reliably.

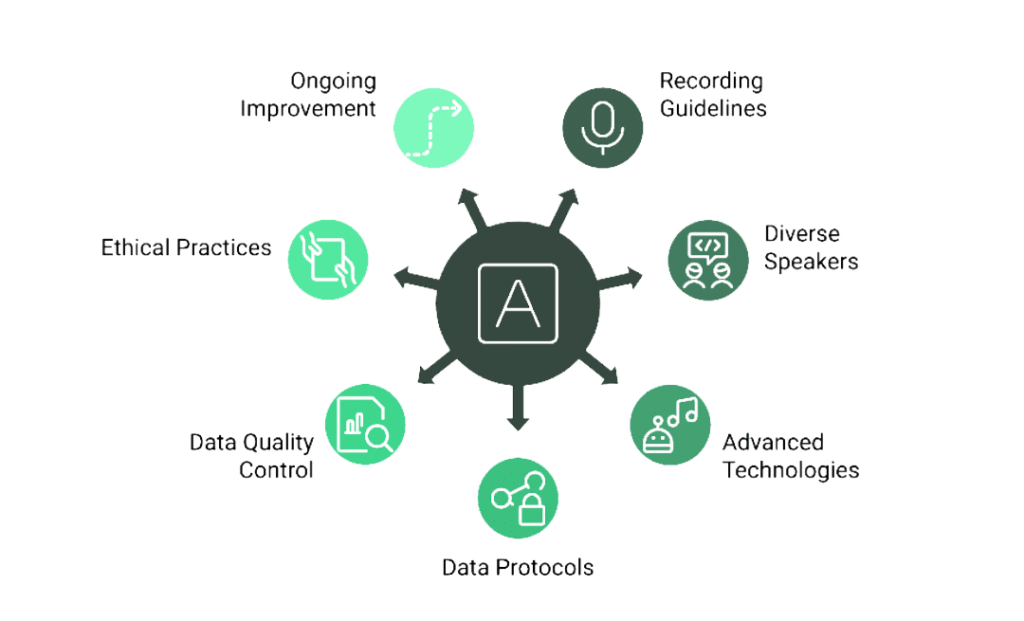

Best Practices to Improve Data Collection

To improve data collection, especially through speech, teams need to address certain challenges like cultural diversity, environmental noise, data quality, and ethical considerations. By adopting best practices as new standards, organizations can make sure the data they collect is reliable and compliant. Here are some key strategies to enhance speech data collection.

1. Set Clear Recording Guidelines

Inconsistent recording, whether it shows up as transcription errors, unclear audio, or too few diverse speech samples, distorts the insights drawn from the data. Consistently upholding recording standards produces uniform speech samples and broad representation in datasets, which leads to higher accuracy.

Establishing standardized recording protocols can help with consistent data quality. In practice, this is a combination of hardware and best practices, such as:

- Using specific equipment

- Recording in the right environment

- Giving the speaker instructions to minimize variability

- Monitoring for errors during recording
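One way to make a recording protocol enforceable is to encode it as data and check every incoming file against it. The sketch below uses Python's standard `wave` module; the `RecordingSpec` values (16 kHz, mono, 16-bit, at least one second) and the helper names are hypothetical choices for illustration, not a recommended standard.

```python
import io
import wave
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordingSpec:
    """Hypothetical recording protocol; the values are illustrative only."""
    sample_rate: int = 16_000   # samples per second
    channels: int = 1           # mono
    sample_width: int = 2       # bytes per sample (16-bit PCM)
    min_seconds: float = 1.0    # reject very short takes


def check_wav(data: bytes, spec: RecordingSpec) -> list[str]:
    """Return the protocol violations found in a WAV file held in memory."""
    problems = []
    with wave.open(io.BytesIO(data), "rb") as wav:
        if wav.getframerate() != spec.sample_rate:
            problems.append(f"sample rate {wav.getframerate()} != {spec.sample_rate}")
        if wav.getnchannels() != spec.channels:
            problems.append(f"channels {wav.getnchannels()} != {spec.channels}")
        if wav.getsampwidth() != spec.sample_width:
            problems.append(f"sample width {wav.getsampwidth()} != {spec.sample_width}")
        duration = wav.getnframes() / wav.getframerate()
        if duration < spec.min_seconds:
            problems.append(f"duration {duration:.2f}s < {spec.min_seconds}s")
    return problems


def make_silent_wav(seconds: float, rate: int, channels: int = 1) -> bytes:
    """Build a silent 16-bit PCM WAV in memory for demonstration."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(seconds * rate))
    return buf.getvalue()


spec = RecordingSpec()
print(check_wav(make_silent_wav(2.0, 16_000), spec))     # []
print(check_wav(make_silent_wav(0.5, 44_100, 2), spec))  # three violations: rate, channels, duration
```

Running a check like this at upload time lets teams reject or re-record a sample immediately instead of discovering the mismatch during training.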

2. Include a Diverse Range of Speakers

Speech AI systems can struggle with different accents, dialects, and languages, which reduces accuracy. A speech recognition system optimized for one dialect can miss nuances in another, leading to misinterpretations.

Incorporating speech samples from people of various cultural and linguistic backgrounds during the training process and beyond can help make sure datasets reflect real-world diversity. Not only does it improve inclusivity in professional settings, but it also helps AI systems handle accents, dialects, and unique speech patterns.
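A simple way to check whether a dataset reflects the speakers it should serve is to count samples per group and flag groups that fall below a minimum share. This sketch assumes each sample carries a metadata label, here a hypothetical `accent` field; the 10% threshold is an arbitrary example.

```python
from collections import Counter


def coverage_report(samples: list[dict], key: str, min_share: float) -> dict[str, float]:
    """Return each group whose share of samples is below min_share.

    `samples` is assumed to be a list of metadata dicts, and `key` names the
    field holding the speaker group (for example, a hypothetical "accent" label).
    """
    counts = Counter(s.get(key, "unknown") for s in samples)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items() if n / total < min_share}


metadata = (
    [{"speaker": f"s{i}", "accent": "us_general"} for i in range(70)]
    + [{"speaker": f"s{i}", "accent": "scottish"} for i in range(70, 75)]
    + [{"speaker": f"s{i}", "accent": "indian_english"} for i in range(75, 100)]
)
print(coverage_report(metadata, "accent", min_share=0.10))  # {'scottish': 0.05}
```

Counting samples rather than distinct speakers can hide cases where one prolific speaker stands in for a whole group, so a fuller audit would likely check both.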

3. Leverage Advanced Technologies

There are several tools available that make it easier to gather reliable speech samples and can lead to more accurate data collection, such as:

- Noise reduction tools: Filter out background noise to get cleaner audio recordings in noisy environments

- Speech recognition software: These systems specialize in recognizing diverse accents and dialects to reduce transcription errors

- Synthetic voice generation: Simulate speech with audio tools to evaluate audio quality and improve overall data accuracy
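Production noise reduction usually relies on spectral methods or learned models. As a much simpler illustration of the idea, the sketch below is a frame-based noise gate in plain Python: it estimates the noise floor from the quietest frames and silences frames close to that floor. The frame length, percentile, and ratio are illustrative assumptions, and the approach assumes the clip contains some noise-only frames, such as leading or trailing silence.

```python
import math


def noise_gate(samples: list[float], frame_len: int,
               noise_floor_pct: float = 0.1, ratio: float = 2.0) -> list[float]:
    """Zero out frames whose RMS is within `ratio` times the estimated noise floor.

    The noise floor is the mean RMS of the quietest `noise_floor_pct` of frames,
    so the clip should contain some noise-only frames for the estimate to be useful.
    """
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]
    rms = [math.sqrt(sum(x * x for x in f) / len(f)) for f in frames]
    ranked = sorted(rms)
    k = max(1, int(len(ranked) * noise_floor_pct))
    floor = sum(ranked[:k]) / k
    out: list[float] = []
    for frame, level in zip(frames, rms):
        # Keep frames clearly above the floor; replace the rest with silence.
        out.extend(frame if level > ratio * floor else [0.0] * len(frame))
    return out


rate, frame = 16_000, 160
noise = [0.01 * (-1) ** n for n in range(10 * frame)]   # low-level hiss stand-in
tone = [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(10 * frame)]
clip = noise + tone + noise
cleaned = noise_gate(clip, frame_len=frame)
```

Here the noise-only stretches at the start and end come out as silence while the tone passes through unchanged. A gate cannot remove noise that overlaps speech, which is why spectral or model-based tools are preferred in practice.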

4. Implement Data Privacy Protocols

Protecting sensitive user data and addressing privacy concerns is critical to instilling trust in users and complying with regulations. Organizations need clear data usage policies to comply with regulations like GDPR or HIPAA, along with robust encryption, anonymization methods, and any additional compliance measures their industry requires. These safeguards help make sure data isn’t misused.

Without addressing privacy concerns, companies can open themselves up to legal consequences.
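As one example of an anonymization-style safeguard, the sketch below pseudonymizes speaker IDs with HMAC-SHA256 and drops direct identifiers from a recording's metadata. The field names in `DIRECT_IDENTIFIERS` and the record layout are hypothetical. Pseudonymized data still counts as personal data under GDPR, and the audio itself may still identify a speaker, so this complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac
import secrets

# Hypothetical metadata fields treated as direct identifiers; adapt to your schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy with direct identifiers removed and the speaker ID keyed-hashed.

    HMAC-SHA256 maps the same speaker to the same pseudonym, so recordings can
    still be grouped per speaker, without revealing the original ID to anyone
    who lacks the key.
    """
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(key, record["speaker_id"].encode("utf-8"), hashlib.sha256)
    cleaned["speaker_id"] = digest.hexdigest()
    return cleaned


key = secrets.token_bytes(32)  # keep in a secrets manager, never next to the data
raw = {"speaker_id": "emp-0042", "name": "Dana", "email": "dana@example.com",
       "accent": "scottish", "audio_path": "clips/0001.wav"}
print(pseudonymize(raw, key))  # name and email removed, speaker_id replaced by a hex digest
```

Using a keyed hash rather than a plain SHA-256 matters here: short, predictable IDs like `emp-0042` could otherwise be recovered by hashing every candidate ID.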

5. Speech Recognition Accuracy

Slang, dialects, regionalisms, and varied pronunciations can be challenging for speech recognition systems. By continuously refining and contextualizing speech samples, teams can help AI models improve their understanding of different speech patterns.
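Recognition accuracy is commonly reported as word error rate (WER): the number of word substitutions (S), deletions (D), and insertions (I) needed to turn the system's transcript into a reference transcript, divided by the number of reference words (N), so WER = (S + D + I) / N. A minimal sketch using a word-level edit distance follows; it applies no text normalization (casing, punctuation), which real evaluations typically do first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] is the edit distance between the previous reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# One substitution (sat -> sit) and one deletion (the) over six reference words.
print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
```

Because insertions count against the score, WER can exceed 1.0 when a system outputs many extra words.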

6. Data Quality Control

Regularly review and validate data to catch inconsistencies, transcription mistakes, or incomplete recordings. Automating parts of this review with tools that flag problem recordings can improve dataset integrity over time.
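Some of these checks are straightforward to automate. This sketch flags a few common problems in a single recording, assuming samples are floats normalized to [-1, 1]; every threshold is an illustrative assumption to be tuned per project.

```python
import math


def quality_issues(samples: list[float], sample_rate: int, transcript: str,
                   min_seconds: float = 1.0, clip_level: float = 0.99,
                   max_clipped: float = 0.01, silence_rms: float = 0.001) -> list[str]:
    """Return a list of quality problems found in one recording (thresholds are examples)."""
    issues = []
    if not transcript.strip():
        issues.append("empty transcript")
    if len(samples) / sample_rate < min_seconds:
        issues.append("too short")
    if samples:
        # Share of samples at or near full scale suggests the input was clipped.
        clipped = sum(1 for x in samples if abs(x) >= clip_level) / len(samples)
        if clipped > max_clipped:
            issues.append("clipping")
        # Very low overall energy suggests a dead microphone or an empty take.
        rms = math.sqrt(sum(x * x for x in samples) / len(samples))
        if rms < silence_rms:
            issues.append("near silent")
    return issues


print(quality_issues([0.0] * 8000, 16_000, ""))  # ['empty transcript', 'too short', 'near silent']
```

Flagged recordings can then be routed to a human reviewer rather than silently dropped, which keeps the review queue small without discarding usable data.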

7. Encourage Ethical Practices

Capturing and storing speech data raises ethical issues, such as obtaining informed consent and being transparent about data usage. To address these issues, organizations need to prioritize user rights and adopt ethical standards and practices that build trust and support compliance.

Make sure your organization has informed consent from participants, and stay transparent about how data is used. You can build trust with users by sharing the ethical standards that guide your data collection efforts.
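One way to put informed consent into practice is to record what each participant agreed to and filter data by intended use before it reaches a training pipeline. The `ConsentRecord` structure and purpose labels such as `"model_training"` below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical consent record captured when a participant agrees to be recorded."""
    speaker_id: str
    purposes: set[str] = field(default_factory=set)  # uses the participant agreed to
    withdrawn: bool = False


def usable_recordings(recordings: list[dict], consents: dict[str, ConsentRecord],
                      purpose: str) -> list[dict]:
    """Keep recordings whose speaker consented to `purpose` and has not withdrawn."""
    kept = []
    for rec in recordings:
        consent = consents.get(rec["speaker_id"])
        if consent is not None and not consent.withdrawn and purpose in consent.purposes:
            kept.append(rec)
    return kept


consents = {
    "a": ConsentRecord("a", {"model_training", "quality_review"}),
    "b": ConsentRecord("b", {"quality_review"}),
    "c": ConsentRecord("c", {"model_training"}, withdrawn=True),
}
recordings = [{"speaker_id": s, "path": f"{s}.wav"} for s in ["a", "b", "c", "d"]]
print([r["path"] for r in usable_recordings(recordings, consents, "model_training")])  # ['a.wav']
```

Defaulting to exclusion, so that speaker `d` with no consent record is dropped, keeps gaps in consent tracking from leaking data into training.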

8. Ongoing Training and Improvement

Continuously refine AI models by introducing new datasets and by gathering feedback to address gaps. Over time, accuracy and speech recognition capabilities will improve if users and employees are trained properly on best practices.
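Feedback is easier to act on when it is aggregated. Assuming users submit corrected transcripts, this sketch uses Python's `difflib.SequenceMatcher` to count which reference words the system most often missed, which can suggest where new data collection should focus. The (hypothesis, correction) pair format is an assumption.

```python
from collections import Counter
from difflib import SequenceMatcher


def misrecognized_words(pairs: list[tuple[str, str]]) -> Counter:
    """Count corrected words that were replaced or missing in the system transcript.

    Each pair is assumed to be (system transcript, user-corrected transcript).
    """
    misses: Counter = Counter()
    for hypothesis, correction in pairs:
        hyp, ref = hypothesis.lower().split(), correction.lower().split()
        matcher = SequenceMatcher(a=hyp, b=ref, autojunk=False)
        for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
            if tag in ("replace", "insert"):  # words in the correction absent from the hypothesis
                misses.update(ref[j1:j2])
    return misses


pairs = [
    ("start the fork lift", "start the forklift"),
    ("stop the fork lift now", "stop the forklift now"),
    ("open valve seven", "open valve eleven"),
]
print(misrecognized_words(pairs).most_common(1))  # [('forklift', 2)]
```

A recurring miss like this points to a concrete gap, such as domain vocabulary, that targeted recordings could fill.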

Looking Ahead to Speech Data Collection Trends

As technology evolves, speech recognition systems are becoming smarter and more accurate, removing some of the challenges and roadblocks described above. For example, aiOla speech AI can accurately detect speech in over 120 languages, including different accents and dialects, even in noisy environments. As these technologies get better at gathering reliable data, organizations can draw more value from the insights that data provides.

Book a demo with one of our experts to learn more about how aiOla accurately collects speech data.