Overview: aiOla is redefining how enterprises interact with technology in the real world – by making voice the primary interface for enterprise AI.

Built on the NVIDIA Enterprise AI Factory validated design, a full-stack reference combining accelerated computing, networking, and AI software from NVIDIA with a broad ecosystem of partners, aiOla empowers organizations to run scalable, on-premises AI solutions tailored for operational excellence.

aiOla specializes in speech-driven automation. Its flagship solution, a voice agent, lets users complete complex, form-based tasks entirely through natural speech. This approach streamlines documentation workflows and boosts efficiency in fields ranging from healthcare to logistics. It also changes how spoken information becomes structured, actionable data, significantly reducing reliance on manual data entry.

The core challenge aiOla tackles is handling jargon-rich, domain-specific speech, a critical hurdle in industries like manufacturing, aviation, and pharmaceuticals. Traditional automatic speech recognition (ASR) systems often falter on the technical language, acronyms, and abbreviations prevalent in these demanding, often noisy, environments.

To master this, aiOla has engineered a real-time keyword spotting (KWS) mechanism directly within its speech pipeline. This intelligent system continuously identifies specialized terms as they are spoken, dynamically guiding the ASR engine for exceptionally high-fidelity transcription. The outcome is dramatically improved accuracy in high-stakes, domain-specific workflows. This ensures that no critical information is missed or misinterpreted, and that voice truly becomes a reliable input for complex operational systems.
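
To make the idea of keyword-guided transcription concrete, here is a minimal sketch. It does not reproduce aiOla's in-pipeline KWS mechanism, whose internals are not described here. Instead it uses a simpler, swapped-in technique: rescoring a list of candidate transcripts after decoding and giving a bonus to candidates that contain known domain terms. The `Hypothesis` type, the `bonus` weight, the keyword list, and the scores are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    log_prob: float  # score from a hypothetical ASR decoder (higher is better)


def count_keyword_hits(text: str, keywords: set[str]) -> int:
    """Count domain keywords that appear as whole tokens or phrases.

    Matching is whitespace-based, so punctuation handling is left out of this sketch.
    """
    padded = f" {text.lower()} "
    return sum(1 for kw in keywords if f" {kw.lower()} " in padded)


def rescore(hypotheses: list[Hypothesis], keywords: set[str], bonus: float = 2.0) -> Hypothesis:
    """Return the hypothesis with the best score after a per-keyword bonus."""
    return max(
        hypotheses,
        key=lambda h: h.log_prob + bonus * count_keyword_hits(h.text, keywords),
    )


# Illustrative data: a few jargon terms and two competing transcripts.
KEYWORDS = {"apu", "pitot tube", "mel"}
candidates = [
    Hypothesis("check the a p you and the pea toe tube", log_prob=-4.1),
    Hypothesis("check the apu and the pitot tube", log_prob=-5.3),
]
best = rescore(candidates, KEYWORDS)
print(best.text)  # check the apu and the pitot tube
```

The decoder alone prefers the first candidate, but the two keyword hits lift the second one above it. Spotting terms while the audio is still being decoded, as described above, lets the keywords shape the transcript itself rather than only re-rank finished candidates.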

Enterprise Voice AI in Action: Transforming Voice into Actionable Safety Reports

For global enterprises in aviation, manufacturing, automotive, and logistics, form-filling is one of the most common, but least optimized, tasks in day-to-day operations. aiOla is changing that by combining real-time natural speech recognition with scalable LLM inference built with NVIDIA NIM microservices.

In this demo, we showcase a real-world use case at a leading global airline, where frontline personnel are responsible for handling safety reports. The solution enables any employee to report a safety incident using natural language, in their preferred language (in this case, English or Spanish). The system then generates a bilingual report that includes a detailed description of the incident along with a suggested resolution. This streamlines the reporting process and ensures that logistics teams are promptly notified.

Our voice agent allows staff to complete the entire process hands-free, in real time, using natural speech in challenging, noisy environments. The system captures spoken input in multiple languages via domain-trained ASR, then feeds it directly into an LLM deployed as an NVIDIA NIM, which parses the transcript and auto-fills the structured form.

An airline demo for handling safety reports in real time

What this unlocks:

- Faster reporting with zero typing or manual duplication

- Hands-free efficiency, ideal for high-volume, on-the-move environments

- Enterprise-grade deployment with scalable inference built with NVIDIA NIM

- Fewer errors, lower cost, and a better customer experience

This is more than transcription – it’s a tightly integrated voice-to-data pipeline that turns everyday speech into fully structured, system-ready input across any workflow.

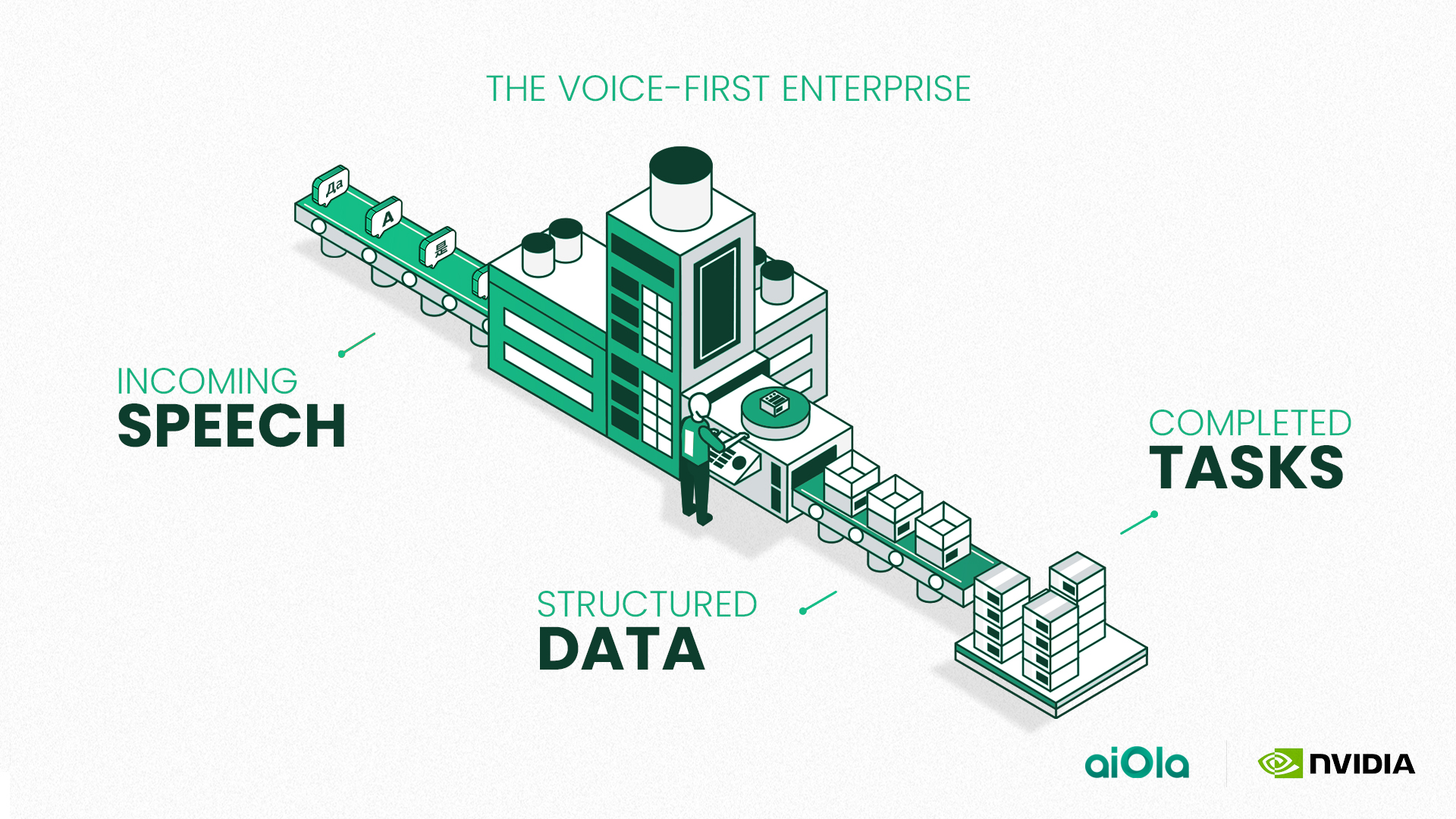
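
The transcript-to-form step described in this section can be sketched as follows. This is a hedged illustration, not the production pipeline: it relies on the OpenAI-compatible chat completions API that LLM NIM microservices expose, and everything else is an assumption made for the example, including the form field names, the prompt wording, and the function names.

```python
import json
import urllib.request

# Hypothetical form fields; a real safety-report form would define its own.
FORM_FIELDS = ["location", "incident_type", "description", "suggested_resolution"]


def build_request(transcript: str, model: str) -> dict:
    """Build an OpenAI-style chat completion payload that asks for a JSON form."""
    system = (
        "Extract a safety report from the transcript. Reply with a single JSON "
        f"object containing exactly these keys: {', '.join(FORM_FIELDS)}. "
        "Use null for anything the speaker did not mention."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": transcript},
        ],
        "temperature": 0.0,
    }


def parse_form(response: dict) -> dict:
    """Pull the form out of a chat completion response; missing keys become None."""
    content = response["choices"][0]["message"]["content"]
    data = json.loads(content)
    return {field: data.get(field) for field in FORM_FIELDS}


def fill_form(transcript: str, url: str, model: str) -> dict:
    """Send the transcript to the endpoint and return the parsed form."""
    body = json.dumps(build_request(transcript, model)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return parse_form(json.load(resp))


# Offline check with a canned response, so no server is needed to try the parser.
fake = {
    "choices": [
        {"message": {"content": '{"location": "Gate 12", "incident_type": "fuel spill"}'}}
    ]
}
form = parse_form(fake)
print(form)
```

Against a running microservice, the call would look like `fill_form(transcript, "http://localhost:8000/v1/chat/completions", "meta/llama-3.1-8b-instruct")`, where both the URL and the model name are placeholders for whatever the deployment actually serves. The parser assumes the model returns bare JSON; a production version would also need to handle malformed or fenced output.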

The Voice-First Enterprise: Speech as the Interface for AI

As AI moves closer to the edge—into factories, airports, hospitals, and warehouses—it becomes clear that traditional interfaces are a bottleneck. Workers aren’t sitting behind desks. They’re on the move, wearing gloves, operating machinery, or managing safety-critical tasks. In these settings, voice isn’t just convenient—it’s the only viable interface for human-computer interaction.

The “Voice-First Enterprise” isn’t a buzzword. It’s a foundational shift in how organizations operationalize AI and data workflows. At aiOla, we treat voice not as a command layer or an accessibility feature, but as a primary data source—on par with IoT streams or transactional logs. This reframing is critical. Speech, when captured correctly, is inherently rich in context, intent, and operational signal. But until recently, its unstructured nature made it difficult to use in structured business systems.

What’s changed? The convergence of several breakthroughs:

- Domain-specific ASR models capable of handling jargon, noisy settings and accents

- Real-time inference at the edge via platforms like NVIDIA TensorRT-LLM and NIM

- Advanced LLMs that can turn natural language into structured, actionable records

This stack unlocks a new interaction paradigm: speak naturally → receive structured insight → trigger automated action. It replaces not just pen and paper but also keyboards and forms with real-time, natural speech-driven data capture and process execution.
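
The last hop of that paradigm, from structured record to automated action, might look like the sketch below. The routing table, team names, and record fields are hypothetical and chosen only for illustration; the `notify` callback stands in for whatever ticketing or messaging system an enterprise already runs.

```python
from typing import Callable

# Hypothetical routing table: maps an incident type to the team to notify.
ROUTES: dict[str, str] = {
    "fuel spill": "ground-safety",
    "equipment fault": "maintenance",
}


def route_record(record: dict, notify: Callable[[str, dict], None]) -> str:
    """Send a structured record to the matching team, or to manual review."""
    if not record.get("description"):
        team = "manual-review"  # incomplete records go to a human
    else:
        team = ROUTES.get(record.get("incident_type", ""), "manual-review")
    notify(team, record)
    return team


# Collect notifications in a list instead of calling a real system.
sent: list[tuple[str, dict]] = []
team = route_record(
    {"incident_type": "fuel spill", "description": "Spill near gate 12"},
    notify=lambda t, r: sent.append((t, r)),
)
print(team)  # ground-safety
```

Falling back to manual review for unknown or incomplete records keeps a person in the loop whenever the structured output cannot be trusted to trigger an action on its own.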

Importantly, this isn’t just faster—it’s more human. Workers don’t need to learn systems. Systems now understand them.

In practice, we’ve seen that a single voice-enabled workflow—like safety reporting or maintenance logging—can boost data capture rates by 3x, while reducing documentation time by 50%. But more than that, it sets the foundation for intelligent automation. Once voice becomes a reliable interface, it can power agents, insights, and orchestration layers across the enterprise.

This is why the Voice-First Enterprise matters: it makes AI accessible at the edge. It captures intelligence that was previously lost in hallway conversations, phone calls, or handwritten notes. And it does so in a way that is inclusive—across languages, roles, and technical skill levels.

At aiOla, we believe voice is the fastest interface to data—and the most direct path to enterprise-wide intelligence.

Conclusion

By collaborating with NVIDIA, and as a member of the NVIDIA Inception program for startups, aiOla gains access to powerful GPU infrastructure and high-performance AI tooling that directly accelerates product innovation. With NVIDIA software like TensorRT-LLM, NVIDIA Dynamo, and NIM, as well as infrastructure like the latest NVIDIA accelerated computing, aiOla is scaling faster and serving its customers more efficiently.

Through this work, aiOla is bringing the future of speech to the present, enabling smarter, faster, and more accurate voice-first applications that fit into the industrial world’s unique linguistic challenges. Learn more about aiOla’s solutions at aiola.ai.

With baseline support for ASR architectures, TensorRT-LLM makes it easy to deploy, experiment, and optimize voice AI. Together, TensorRT-LLM and Dynamo provide an indispensable toolkit for optimizing, deploying, and running LLMs and ASR models efficiently. Get started today with the TensorRT-LLM Whisper example.

Authors

Amit Bleiweiss is a senior data scientist at NVIDIA, where he focuses on large language models and generative AI. He has 25 years of experience in applied machine learning and deep learning, with over 50 patents and publications in the domain. Amit received his MSc from Hebrew University of Jerusalem, where he specialized in machine learning.

Gill Hetz is the VP of AI and Research at aiOla, where he leads the company’s technology, IP, and innovation strategy with a strong focus on applied machine learning. He holds a Ph.D. from Texas A&M University and has over 15 years of experience in data science and engineering.

His expertise includes ASR, NLP, and deep learning architectures, with a focus on machine learning–driven product development. Throughout his career, Gill has contributed to multiple organizations as a technical leader, with academic publications and patents in the fields of AI and computational modeling.

Aviv Navon is a research team lead at aiOla, where he leads the development of advanced speech technologies, including automatic speech recognition, speech synthesis, and spoken language understanding. He holds a Ph.D. from Bar-Ilan University, where his research focused on multitask learning and equivariant weight space networks.

Aviv has over 10 years of industry experience, having worked in various roles across startups and tech companies, where he applied machine learning and statistical modeling to solve real-world data challenges across domains such as mobility, finance, and wearable technology.

Aviv Shamsian is a Data Science Tech Lead at aiOla, where he is responsible for advancing the company’s intellectual property through the development of novel technologies, academic publications, and patents. Prior to this role, he worked as a Computer Vision Researcher at Amazon, where he developed large-scale human activity recognition algorithms deployed to millions of users.

Aviv holds a Ph.D. from Bar-Ilan University, where his research focused on multi-task learning and weight space learning. He has authored over 20 academic papers, with a strong emphasis on multi-task learning methodologies and their applications in modern machine learning systems.

Asaf Buchnick is an Applied Data Scientist with a background in backend engineering, currently shaping the building blocks of a cutting-edge Conversational AI platform. His work bridges engineering and machine learning to craft intuitive, human-centric dialogue experiences.

He is also pursuing a master’s degree in Data Science at Bar-Ilan University, where he explores advanced AI methods to enhance guidance in Generative Text to Image models.